Deep learning excels at performing complex machine learning tasks. In this post, we will use neural networks to classify images. For this example, we will be using a dataset with images of cats and dogs to train a model that can differentiate between them.

First, we will begin with our import statements.

import numpy as np

import matplotlib.pyplot as plt

import os

import tensorflow as tf

from tensorflow.keras import datasets, layers, models, utils

Next, we will extract the data from the internet and define the parameters that we are using for the input data. After this is done, we can separate our data into train, test, and validation sets.
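As a rough sketch of how the validation data ends up being divided, the arithmetic behind the split can be worked out by hand. This assumes the validation folder holds 1000 images (the count the loader reports when the code that follows is run) and uses the batch size of 32 set in that code; the variable names here are illustrative only.

```python
import math

# Assumption: number of validation images, as reported by the loader
NUM_VALIDATION_IMAGES = 1000
BATCH_SIZE = 32

# The final partial batch is kept, so the batch count is rounded up
val_batches = math.ceil(NUM_VALIDATION_IMAGES / BATCH_SIZE)

# take(val_batches // 5) selects the first fifth of the batches for testing
test_batches = val_batches // 5

# skip(val_batches // 5) leaves the remaining batches for validation
remaining_val_batches = val_batches - test_batches

print("validation batches before split:", val_batches)
print("test batches:", test_batches)
print("validation batches after split:", remaining_val_batches)
```

Under these assumptions the test set is built from whole batches, so its size is a multiple of the batch size rather than exactly one fifth of the images.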

# location of data

_URL = 'https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip'

# download the data and extract it

path_to_zip = utils.get_file('cats_and_dogs.zip', origin=_URL, extract=True)

# construct paths

PATH = os.path.join(os.path.dirname(path_to_zip), 'cats_and_dogs_filtered')

train_dir = os.path.join(PATH, 'train')

validation_dir = os.path.join(PATH, 'validation')

# parameters for datasets

BATCH_SIZE = 32

IMG_SIZE = (160, 160)

# construct train and validation datasets

train_dataset = utils.image_dataset_from_directory(train_dir,

shuffle=True,

batch_size=BATCH_SIZE,

image_size=IMG_SIZE)

validation_dataset = utils.image_dataset_from_directory(validation_dir,

shuffle=True,

batch_size=BATCH_SIZE,

image_size=IMG_SIZE)

# construct the test dataset from the first fifth of the validation batches; the remaining batches stay in the validation dataset

val_batches = tf.data.experimental.cardinality(validation_dataset)

test_dataset = validation_dataset.take(val_batches // 5)

validation_dataset = validation_dataset.skip(val_batches // 5)

Downloading data from https://storage.googleapis.com/mledu-datasets/cats_and_dogs_filtered.zip

68606236/68606236 [==============================] - 1s 0us/step

Found 2000 files belonging to 2 classes.

Found 1000 files belonging to 2 classes.

# allows the data to be rapidly read

AUTOTUNE = tf.data.AUTOTUNE

train_dataset = train_dataset.prefetch(buffer_size=AUTOTUNE)

validation_dataset = validation_dataset.prefetch(buffer_size=AUTOTUNE)

test_dataset = test_dataset.prefetch(buffer_size=AUTOTUNE)

Let’s try to visualize some of our data before we build our model. Below, we will define a function that shows three pictures of cats and three pictures of dogs from the data set.

def show_cats_and_dogs(dataset):

    plt.figure(figsize=(10,5))

    # loop through tensor (a single batch of images and labels)

    for images, labels in dataset.take(1):

        # separate images by label

        cats = images[labels==0]

        dogs = images[labels==1]

        # create plots for cats (top row)

        for i in range(3):

            plt.subplot(2,3,i+1)

            plt.imshow(cats[np.random.randint(0,len(cats))]/255)

            plt.axis('off')

        # create plots for dogs (bottom row)

        for i in range(3):

            plt.subplot(2,3,i+4)

            plt.imshow(dogs[np.random.randint(0,len(dogs))]/255)

            plt.axis('off')

    plt.show()

# visualize the data

show_cats_and_dogs(train_dataset)

The line of code below will create an iterator called `labels_iterator`, which yields the label of each training image one at a time.

labels_iterator = train_dataset.unbatch().map(lambda image, label: label).as_numpy_iterator()

Now that we have seen what our data set looks like, we need to determine the baseline to compare our model against. Our baseline would be the accuracy the classifier would achieve by guessing. In order to determine the baseline, we will need to determine the proportion of cats and dogs in the data set.

# create counters for cats and dogs

num_cats = 0

num_dogs = 0

# loop the labels and count the number of cats and dogs

for label in labels_iterator:

    if label == 0:

        num_cats += 1

    elif label == 1:

        num_dogs += 1

# show results

print("Number of cat images in the training data:", num_cats)

print("Number of dog images in the training data:", num_dogs)

Number of cat images in the training data: 1000

Number of dog images in the training data: 1000

Since there is a 50/50 split between the two classes, the baseline model would have an accuracy of 50%. Thus, we would expect a good model to perform far better than this.
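To make the baseline reasoning concrete, here is a minimal sketch of a majority-class baseline: the accuracy obtained by always predicting the most common class. The helper `majority_baseline` and the imbalanced counts are hypothetical and only included for comparison; the balanced counts are the ones printed above.

```python
def majority_baseline(counts):
    """Return the accuracy of always predicting the most common class."""
    total = sum(counts.values())
    return max(counts.values()) / total

# Class counts from the training data in this post
balanced = majority_baseline({"cat": 1000, "dog": 1000})

# Hypothetical imbalanced data set, for comparison only
imbalanced = majority_baseline({"cat": 1500, "dog": 500})

print("balanced baseline:", balanced)
print("imbalanced baseline:", imbalanced)
```

With balanced classes the baseline is the same as random guessing, which is why 50% is the number to beat here.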

element = next(iter(train_dataset))

# print shape of image tensor

image = element[0]

print("Shape:", image.shape)

Shape: (32, 160, 160, 3)

The code above shows us the shape of the tensor: each batch holds 32 images of 160 x 160 pixels with 3 color channels. We can now use this information for the input shape to build our first model. We will use Conv2D layers, MaxPooling2D layers, a Flatten layer, two Dense layers, and one Dropout layer in this model.
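Before defining the model, it may help to trace how the spatial size of an image shrinks as it passes through convolution and pooling layers. The sketch below is plain arithmetic, not Keras code. It assumes the Keras defaults of 'valid' padding, a stride of 1 for convolutions, and a pooling stride equal to the pool size; the kernel and pool sizes are the ones used in the model definition that follows, and the helper names are made up for illustration.

```python
def conv_out(size, kernel):
    """Output width of a 'valid' convolution with stride 1."""
    return size - kernel + 1

def pool_out(size, pool):
    """Output width of 'valid' max pooling with stride equal to the pool size."""
    return size // pool

size = 160                 # input images are 160 x 160
size = conv_out(size, 3)   # Conv2D with a 3x3 kernel
size = pool_out(size, 5)   # MaxPooling2D with a 5x5 window
size = conv_out(size, 3)   # Conv2D with a 3x3 kernel
size = pool_out(size, 2)   # MaxPooling2D with a 2x2 window
size = conv_out(size, 3)   # Conv2D with a 3x3 kernel

# The last Conv2D layer has 32 filters, so Flatten produces this many features
flattened = size * size * 32

print("final feature map width:", size)
print("features after Flatten:", flattened)
```

Under these assumptions the first Dense layer sees a few thousand input features, which gives a sense of how much the pooling layers reduce the 160 x 160 x 3 input.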

model1 = models.Sequential([

layers.Conv2D(32, (3, 3), activation='relu', input_shape=(160, 160, 3)),

layers.MaxPooling2D((5, 5)),

layers.Conv2D(32, (3, 3), activation='relu'),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(32, (3, 3), activation='relu'),

layers.Flatten(),

layers.Dense(32, activation='relu'),

layers.Dropout(0.1),

layers.Dense(2)

])

model1.compile(optimizer='adam',

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics = ['accuracy'])

history = model1.fit(train_dataset,

epochs=20,

validation_data=validation_dataset)

Epoch 1/20

63/63 [==============================] - 5s 50ms/step - loss: 2.8102 - accuracy: 0.5040 - val_loss: 0.7026 - val_accuracy: 0.5260

Epoch 2/20

63/63 [==============================] - 3s 49ms/step - loss: 0.6856 - accuracy: 0.5575 - val_loss: 0.6952 - val_accuracy: 0.5248

Epoch 3/20

63/63 [==============================] - 4s 65ms/step - loss: 0.6599 - accuracy: 0.5765 - val_loss: 0.7723 - val_accuracy: 0.5149

Epoch 4/20

63/63 [==============================] - 3s 48ms/step - loss: 0.6419 - accuracy: 0.6185 - val_loss: 0.7105 - val_accuracy: 0.5507

Epoch 5/20

63/63 [==============================] - 3s 48ms/step - loss: 0.6452 - accuracy: 0.6095 - val_loss: 0.7281 - val_accuracy: 0.5223

Epoch 6/20

63/63 [==============================] - 4s 66ms/step - loss: 0.6128 - accuracy: 0.6225 - val_loss: 0.8110 - val_accuracy: 0.5495

Epoch 7/20

63/63 [==============================] - 3s 49ms/step - loss: 0.5839 - accuracy: 0.6430 - val_loss: 0.7377 - val_accuracy: 0.5322

Epoch 8/20

63/63 [==============================] - 3s 49ms/step - loss: 0.5400 - accuracy: 0.7020 - val_loss: 0.9747 - val_accuracy: 0.5520

Epoch 9/20

63/63 [==============================] - 4s 64ms/step - loss: 0.4993 - accuracy: 0.7210 - val_loss: 0.9249 - val_accuracy: 0.5421

Epoch 10/20

63/63 [==============================] - 3s 49ms/step - loss: 0.4546 - accuracy: 0.7670 - val_loss: 0.9760 - val_accuracy: 0.5334

Epoch 11/20

63/63 [==============================] - 3s 49ms/step - loss: 0.4472 - accuracy: 0.7750 - val_loss: 1.0596 - val_accuracy: 0.5532

Epoch 12/20

63/63 [==============================] - 3s 49ms/step - loss: 0.3819 - accuracy: 0.8140 - val_loss: 1.0049 - val_accuracy: 0.5767

Epoch 13/20

63/63 [==============================] - 3s 48ms/step - loss: 0.4024 - accuracy: 0.7970 - val_loss: 1.0446 - val_accuracy: 0.5606

Epoch 14/20

63/63 [==============================] - 4s 53ms/step - loss: 0.3552 - accuracy: 0.8220 - val_loss: 1.1379 - val_accuracy: 0.5433

Epoch 15/20

63/63 [==============================] - 4s 56ms/step - loss: 0.3055 - accuracy: 0.8605 - val_loss: 1.2468 - val_accuracy: 0.5569

Epoch 16/20

63/63 [==============================] - 3s 48ms/step - loss: 0.3026 - accuracy: 0.8550 - val_loss: 1.1123 - val_accuracy: 0.5569

Epoch 17/20

63/63 [==============================] - 3s 49ms/step - loss: 0.2941 - accuracy: 0.8615 - val_loss: 1.4057 - val_accuracy: 0.5371

Epoch 18/20

63/63 [==============================] - 4s 54ms/step - loss: 0.2682 - accuracy: 0.8785 - val_loss: 1.2205 - val_accuracy: 0.5718

Epoch 19/20

63/63 [==============================] - 3s 47ms/step - loss: 0.2189 - accuracy: 0.9010 - val_loss: 1.3797 - val_accuracy: 0.5569

Epoch 20/20

63/63 [==============================] - 3s 51ms/step - loss: 0.1730 - accuracy: 0.9235 - val_loss: 1.4376 - val_accuracy: 0.5866# plot validation and training accuracy

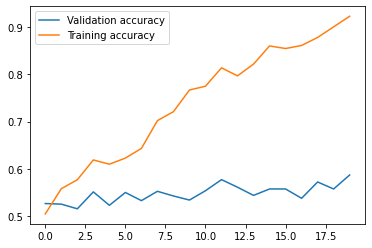

plt.plot(history.history['val_accuracy'], label='Validation accuracy')

plt.plot(history.history['accuracy'], label='Training accuracy')

plt.legend()

plt.show()

The validation accuracy of the model stabilized at around 55-58% during training. This is only a modest improvement over the baseline of 50%. There does appear to be overfitting, as the training accuracy became significantly higher than the validation accuracy as the number of epochs increased, reaching about 92% by the final epoch.
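One simple way to put a number on overfitting is to look at the gap between training and validation accuracy for each epoch. The sketch below uses a hypothetical helper, `accuracy_gap`, and only the first and last epochs from the training log above; in the notebook, `history.history` could presumably be passed in directly, since it is a dictionary with the same keys.

```python
def accuracy_gap(history_dict):
    """Per-epoch difference between training and validation accuracy."""
    pairs = zip(history_dict["accuracy"], history_dict["val_accuracy"])
    return [round(train - val, 4) for train, val in pairs]

# First and last epochs copied from the model 1 training log
logged = {
    "accuracy": [0.5040, 0.9235],
    "val_accuracy": [0.5260, 0.5866],
}

gaps = accuracy_gap(logged)
print("gap at first epoch:", gaps[0])
print("gap at last epoch:", gaps[-1])
```

A gap that starts near zero and grows steadily, as it does here, is the pattern described in the paragraph above.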

Data Augmentation

Let’s try to improve upon our first model by augmenting the amount of data that is supplied to train the model. Two ways that we can augment data for images would be by rotating or flipping the image. This would allow the model to find invariant features of the input image. Let’s try out some functions to flip and rotate the images below.

plt.imshow(image[0] / 255.0)

plt.show()

# try one flip

flip1 = layers.RandomFlip()(image[0] / 255.0)

plt.imshow(flip1)

plt.show()

# try another flip

flip2 = layers.RandomFlip()(image[0] / 255.0)

plt.imshow(flip2)

plt.show()

plt.imshow(image[0]/ 255.0)

plt.show()

# try one rotation

rot1 = layers.RandomRotation(90)(image[0] / 255.0)

plt.imshow(rot1)

plt.show()

# try another rotation

rot2 = layers.RandomRotation(180)(image[0] / 255.0)

plt.imshow(rot2)

plt.show()
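As a small numeric illustration of what a flip and a rotation do to the underlying pixel array, here is a NumPy sketch on a tiny made-up "image". Note that `np.fliplr`, `np.flipud`, and `np.rot90` are deterministic stand-ins chosen for readability; they are not what the Keras layers call internally, and the Keras layers pick the transformation at random.

```python
import numpy as np

# A tiny 2x3 single-channel "image" so the effect is easy to read
img = np.array([[1, 2, 3],
                [4, 5, 6]])

flipped_lr = np.fliplr(img)   # mirror left to right
flipped_ud = np.flipud(img)   # mirror top to bottom
rotated = np.rot90(img)       # rotate by 90 degrees counter-clockwise

print(flipped_lr)
print(flipped_ud)
print(rotated)
```

The pixel values are unchanged and only their positions move, which is why a cat remains recognizable as a cat after these transformations.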

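Now that we understand how RandomFlip and RandomRotation work, we can add them as layers to our model. Note that the argument to RandomRotation is a fraction of a full turn (2π) rather than an angle in degrees, so a value of 1 or more allows rotation by any angle. Let’s see how this changes the performance.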

model2 = models.Sequential([

layers.RandomFlip("horizontal"),

layers.RandomRotation(30),

layers.Conv2D(32, (3, 3), activation='relu', input_shape=(160, 160, 3)),

layers.MaxPooling2D((3, 3)),

layers.Conv2D(32, (3, 3), activation='relu'),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, (3, 3), activation='relu'),

layers.Flatten(),

layers.Dense(64, activation='relu'),

layers.Dropout(0.2),

layers.Dense(2)

])

model2.compile(optimizer='adam',

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics = ['accuracy'])

history = model2.fit(train_dataset,

epochs=20,

validation_data=validation_dataset)

Epoch 1/20

63/63 [==============================] - 23s 139ms/step - loss: 21.8647 - accuracy: 0.5165 - val_loss: 0.6957 - val_accuracy: 0.5223

Epoch 2/20

63/63 [==============================] - 8s 118ms/step - loss: 0.6935 - accuracy: 0.4910 - val_loss: 0.6887 - val_accuracy: 0.5396

Epoch 3/20

63/63 [==============================] - 8s 130ms/step - loss: 0.6884 - accuracy: 0.5320 - val_loss: 0.6866 - val_accuracy: 0.5446

Epoch 4/20

63/63 [==============================] - 9s 132ms/step - loss: 0.6902 - accuracy: 0.5215 - val_loss: 0.6899 - val_accuracy: 0.5322

Epoch 5/20

63/63 [==============================] - 7s 115ms/step - loss: 0.6887 - accuracy: 0.5460 - val_loss: 0.6902 - val_accuracy: 0.5483

Epoch 6/20

63/63 [==============================] - 8s 130ms/step - loss: 0.6813 - accuracy: 0.5770 - val_loss: 0.6838 - val_accuracy: 0.5780

Epoch 7/20

63/63 [==============================] - 9s 144ms/step - loss: 0.6767 - accuracy: 0.5675 - val_loss: 0.6787 - val_accuracy: 0.5780

Epoch 8/20

63/63 [==============================] - 8s 116ms/step - loss: 0.6754 - accuracy: 0.5575 - val_loss: 0.6897 - val_accuracy: 0.5297

Epoch 9/20

63/63 [==============================] - 8s 120ms/step - loss: 0.6772 - accuracy: 0.5710 - val_loss: 0.6714 - val_accuracy: 0.5965

Epoch 10/20

63/63 [==============================] - 8s 129ms/step - loss: 0.6681 - accuracy: 0.5960 - val_loss: 0.6803 - val_accuracy: 0.5941

Epoch 11/20

63/63 [==============================] - 8s 130ms/step - loss: 0.6862 - accuracy: 0.5585 - val_loss: 0.6922 - val_accuracy: 0.5149

Epoch 12/20

63/63 [==============================] - 7s 115ms/step - loss: 0.6825 - accuracy: 0.5505 - val_loss: 0.6913 - val_accuracy: 0.5557

Epoch 13/20

63/63 [==============================] - 8s 126ms/step - loss: 0.6833 - accuracy: 0.5405 - val_loss: 0.6998 - val_accuracy: 0.5396

Epoch 14/20

63/63 [==============================] - 8s 130ms/step - loss: 0.6875 - accuracy: 0.5475 - val_loss: 0.6902 - val_accuracy: 0.5421

Epoch 15/20

63/63 [==============================] - 7s 115ms/step - loss: 0.6868 - accuracy: 0.5420 - val_loss: 0.7042 - val_accuracy: 0.5458

Epoch 16/20

63/63 [==============================] - 8s 131ms/step - loss: 0.6746 - accuracy: 0.5680 - val_loss: 0.6999 - val_accuracy: 0.5668

Epoch 17/20

63/63 [==============================] - 8s 131ms/step - loss: 0.6813 - accuracy: 0.5510 - val_loss: 0.6944 - val_accuracy: 0.5718

Epoch 18/20

63/63 [==============================] - 9s 142ms/step - loss: 0.6694 - accuracy: 0.5925 - val_loss: 0.6851 - val_accuracy: 0.5619

Epoch 19/20

63/63 [==============================] - 8s 116ms/step - loss: 0.6743 - accuracy: 0.5900 - val_loss: 0.6799 - val_accuracy: 0.5854

Epoch 20/20

63/63 [==============================] - 8s 131ms/step - loss: 0.6664 - accuracy: 0.5795 - val_loss: 0.6881 - val_accuracy: 0.5854# plot validation and training accuracy

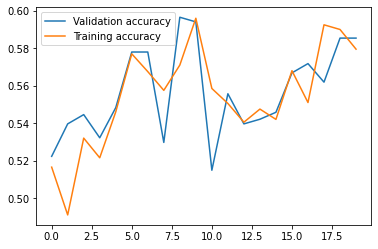

plt.plot(history.history['val_accuracy'], label='Validation accuracy')

plt.plot(history.history['accuracy'], label='Training accuracy')

plt.legend()

plt.show()

The validation accuracy stabilized around 56-58% during training. This is slightly better than what was obtained in model 1. Additionally, the model does not appear to be overfit, as the training and validation accuracies are mostly similar.

Adding a Preprocessing Step

In order to further improve this model, we can also add a preprocessing step. The original pixels have RGB values between 0 and 255. If we rescale them to a small range centered on zero (the `mobilenet_v2.preprocess_input` function used below maps them to the range -1 to 1), the model can focus on identifying the features that distinguish the two classes rather than adjusting its weights to the scale of the input. Let’s see how preprocessing changes performance.
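To show what this kind of rescaling does numerically, here is a minimal NumPy sketch. It assumes the scaling documented for `mobilenet_v2.preprocess_input`, which maps pixel values into the range -1 to 1; the function `scale_pixels` is a hypothetical stand-in written for illustration and is not the Keras implementation.

```python
import numpy as np

def scale_pixels(pixels):
    """Map pixel values from [0, 255] to [-1, 1] (illustrative stand-in)."""
    return np.asarray(pixels, dtype=np.float32) / 127.5 - 1.0

# Darkest, middle, and brightest possible pixel values
sample = np.array([0.0, 127.5, 255.0])
scaled = scale_pixels(sample)

print(scaled)
```

The ordering of the pixel values is preserved; only their scale and center change.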

i = tf.keras.Input(shape=(160, 160, 3))

x = tf.keras.applications.mobilenet_v2.preprocess_input(i)

preprocessor = tf.keras.Model(inputs = [i], outputs = [x])

model3 = models.Sequential([

preprocessor,

layers.RandomFlip("horizontal"),

layers.RandomRotation(30),

layers.Conv2D(32, (3, 3), activation='relu', input_shape=(160, 160, 3)),

layers.MaxPooling2D((3, 3)),

layers.Conv2D(32, (3, 3), activation='relu'),

layers.MaxPooling2D((2, 2)),

layers.Conv2D(64, (3, 3), activation='relu'),

layers.Flatten(),

layers.Dense(64, activation='relu'),

layers.Dropout(0.1),

layers.Dense(2)

])

model3.compile(optimizer='adam',

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics = ['accuracy'])

history = model3.fit(train_dataset,

epochs=20,

validation_data=validation_dataset)

Epoch 1/20

63/63 [==============================] - 11s 130ms/step - loss: 0.7200 - accuracy: 0.5520 - val_loss: 0.6747 - val_accuracy: 0.5866

Epoch 2/20

63/63 [==============================] - 7s 114ms/step - loss: 0.6771 - accuracy: 0.5830 - val_loss: 0.6438 - val_accuracy: 0.5990

Epoch 3/20

63/63 [==============================] - 9s 142ms/step - loss: 0.6427 - accuracy: 0.6305 - val_loss: 0.6340 - val_accuracy: 0.6423

Epoch 4/20

63/63 [==============================] - 8s 128ms/step - loss: 0.6482 - accuracy: 0.6290 - val_loss: 0.6398 - val_accuracy: 0.6485

Epoch 5/20

63/63 [==============================] - 7s 114ms/step - loss: 0.6090 - accuracy: 0.6745 - val_loss: 0.6078 - val_accuracy: 0.6597

Epoch 6/20

63/63 [==============================] - 8s 114ms/step - loss: 0.6035 - accuracy: 0.6675 - val_loss: 0.6018 - val_accuracy: 0.6671

Epoch 7/20

63/63 [==============================] - 8s 127ms/step - loss: 0.5919 - accuracy: 0.6790 - val_loss: 0.5797 - val_accuracy: 0.7017

Epoch 8/20

63/63 [==============================] - 8s 118ms/step - loss: 0.5999 - accuracy: 0.6845 - val_loss: 0.5618 - val_accuracy: 0.7178

Epoch 9/20

63/63 [==============================] - 8s 120ms/step - loss: 0.5676 - accuracy: 0.7025 - val_loss: 0.5414 - val_accuracy: 0.7265

Epoch 10/20

63/63 [==============================] - 8s 128ms/step - loss: 0.5508 - accuracy: 0.7145 - val_loss: 0.5528 - val_accuracy: 0.7203

Epoch 11/20

63/63 [==============================] - 9s 147ms/step - loss: 0.5547 - accuracy: 0.7145 - val_loss: 0.5415 - val_accuracy: 0.7203

Epoch 12/20

63/63 [==============================] - 7s 114ms/step - loss: 0.5498 - accuracy: 0.7120 - val_loss: 0.5402 - val_accuracy: 0.7314

Epoch 13/20

63/63 [==============================] - 8s 129ms/step - loss: 0.5476 - accuracy: 0.7200 - val_loss: 0.5388 - val_accuracy: 0.7475

Epoch 14/20

63/63 [==============================] - 8s 129ms/step - loss: 0.5428 - accuracy: 0.7200 - val_loss: 0.5418 - val_accuracy: 0.7277

Epoch 15/20

63/63 [==============================] - 9s 139ms/step - loss: 0.5279 - accuracy: 0.7335 - val_loss: 0.5775 - val_accuracy: 0.7116

Epoch 16/20

63/63 [==============================] - 8s 127ms/step - loss: 0.5294 - accuracy: 0.7185 - val_loss: 0.5155 - val_accuracy: 0.7450

Epoch 17/20

63/63 [==============================] - 8s 129ms/step - loss: 0.5158 - accuracy: 0.7550 - val_loss: 0.5160 - val_accuracy: 0.7525

Epoch 18/20

63/63 [==============================] - 8s 129ms/step - loss: 0.5175 - accuracy: 0.7385 - val_loss: 0.5329 - val_accuracy: 0.7463

Epoch 19/20

63/63 [==============================] - 7s 112ms/step - loss: 0.5070 - accuracy: 0.7555 - val_loss: 0.5097 - val_accuracy: 0.7463

Epoch 20/20

63/63 [==============================] - 8s 128ms/step - loss: 0.5007 - accuracy: 0.7425 - val_loss: 0.5177 - val_accuracy: 0.7426# plot validation and training accuracy

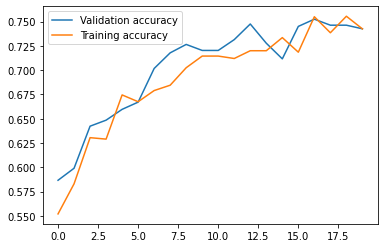

plt.plot(history.history['val_accuracy'], label='Validation accuracy')

plt.plot(history.history['accuracy'], label='Training accuracy')

plt.legend()

plt.show()

The validation accuracy stabilized around 73-74% during training. This is a significant improvement from what was obtained in model 1. The model also does not appear to be overfit, as the training and validation accuracies are similar.

Using Transfer Learning

Although we have achieved a model that performs above baseline by building it from scratch, we could create a much better performing model by using a pre-trained model. In this example we will use MobileNetV2, a model that has already been trained for image classification on the ImageNet data set, as a base layer for our model. We can use the code below to download MobileNetV2 and configure it as a layer.

IMG_SIZE = (160, 160)

i = tf.keras.Input(shape=(160, 160, 3))

x = tf.keras.applications.mobilenet_v2.preprocess_input(i)

preprocessor = tf.keras.Model(inputs = [i], outputs = [x])

IMG_SHAPE = IMG_SIZE + (3,)

base_model = tf.keras.applications.MobileNetV2(input_shape=IMG_SHAPE,

include_top=False,

weights='imagenet')

base_model.trainable = False

i = tf.keras.Input(shape=IMG_SHAPE)

x = base_model(i, training = False)

base_model_layer = tf.keras.Model(inputs = [i], outputs = [x])

Downloading data from https://storage.googleapis.com/tensorflow/keras-applications/mobilenet_v2/mobilenet_v2_weights_tf_dim_ordering_tf_kernels_1.0_160_no_top.h5

9406464/9406464 [==============================] - 0s 0us/step

Now that the layer has been created, let’s put this into a model. We will still use our data augmentation techniques, GlobalAveragePooling2D, Dropout, and Dense in addition to the pre-trained base layer that we downloaded. We also print a summary of the model so that we can visualize its layers.
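As a quick sanity check on the parameter counts that the model summary reports, the number of trainable parameters can be worked out by hand. Because the base model is frozen, only the final Dense layer is trained. The sketch below assumes the 1280 features produced by global average pooling and the frozen parameter count shown in the summary; `dense_params` is a hypothetical helper.

```python
def dense_params(inputs, units):
    """A Dense layer has one weight per input per unit, plus one bias per unit."""
    return inputs * units + units

# 1280 pooled features feed a Dense layer with 2 outputs
head_params = dense_params(1280, 2)

# Frozen MobileNetV2 parameters, as reported by the model summary
base_params = 2_257_984

total_params = head_params + base_params

print("trainable parameters:", head_params)
print("total parameters:", total_params)
```

Training only a couple of thousand parameters on top of a frozen base is what keeps transfer learning fast on a small data set like this one.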

model4 = models.Sequential([

preprocessor,

layers.RandomFlip("horizontal"),

layers.RandomRotation(180),

base_model_layer,

layers.GlobalAveragePooling2D(),

layers.Dropout(0.2),

layers.Dense(2)

])

model4.summary()

Model: "sequential_4"

_________________________________________________________________

Layer (type)                                          Output Shape           Param #

=================================================================

model (Functional)                                    (None, 160, 160, 3)    0

random_flip_3 (RandomFlip)                            (None, 160, 160, 3)    0

random_rotation_3 (RandomRotation)                    (None, 160, 160, 3)    0

model_1 (Functional)                                  (None, 5, 5, 1280)     2257984

global_average_pooling2d_3 (GlobalAveragePooling2D)   (None, 1280)           0

dropout_4 (Dropout)                                   (None, 1280)           0

dense_4 (Dense)                                       (None, 2)              2562

=================================================================

Total params: 2,260,546

Trainable params: 2,562

Non-trainable params: 2,257,984

_________________________________________________________________

model4.compile(optimizer='adam',

loss = tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True),

metrics = ['accuracy'])

history = model4.fit(train_dataset,

epochs=20,

validation_data=validation_dataset)

Epoch 1/20

63/63 [==============================] - 14s 140ms/step - loss: 0.3827 - accuracy: 0.8285 - val_loss: 0.1085 - val_accuracy: 0.9666

Epoch 2/20

63/63 [==============================] - 9s 141ms/step - loss: 0.2490 - accuracy: 0.8970 - val_loss: 0.0744 - val_accuracy: 0.9777

Epoch 3/20

63/63 [==============================] - 9s 141ms/step - loss: 0.2225 - accuracy: 0.9115 - val_loss: 0.0667 - val_accuracy: 0.9765

Epoch 4/20

63/63 [==============================] - 10s 150ms/step - loss: 0.2077 - accuracy: 0.9115 - val_loss: 0.0751 - val_accuracy: 0.9728

Epoch 5/20

63/63 [==============================] - 8s 129ms/step - loss: 0.2075 - accuracy: 0.9160 - val_loss: 0.0666 - val_accuracy: 0.9740

Epoch 6/20

63/63 [==============================] - 10s 156ms/step - loss: 0.2073 - accuracy: 0.9145 - val_loss: 0.0686 - val_accuracy: 0.9715

Epoch 7/20

63/63 [==============================] - 13s 200ms/step - loss: 0.1973 - accuracy: 0.9190 - val_loss: 0.0645 - val_accuracy: 0.9790

Epoch 8/20

63/63 [==============================] - 9s 142ms/step - loss: 0.1759 - accuracy: 0.9320 - val_loss: 0.0769 - val_accuracy: 0.9678

Epoch 9/20

63/63 [==============================] - 10s 155ms/step - loss: 0.1919 - accuracy: 0.9210 - val_loss: 0.0722 - val_accuracy: 0.9752

Epoch 10/20

63/63 [==============================] - 8s 125ms/step - loss: 0.1714 - accuracy: 0.9315 - val_loss: 0.0646 - val_accuracy: 0.9777

Epoch 11/20

63/63 [==============================] - 10s 146ms/step - loss: 0.1715 - accuracy: 0.9325 - val_loss: 0.0642 - val_accuracy: 0.9802

Epoch 12/20

63/63 [==============================] - 10s 150ms/step - loss: 0.1726 - accuracy: 0.9260 - val_loss: 0.0668 - val_accuracy: 0.9740

Epoch 13/20

63/63 [==============================] - 10s 149ms/step - loss: 0.1676 - accuracy: 0.9335 - val_loss: 0.0656 - val_accuracy: 0.9765

Epoch 14/20

63/63 [==============================] - 10s 159ms/step - loss: 0.1740 - accuracy: 0.9315 - val_loss: 0.0679 - val_accuracy: 0.9765

Epoch 15/20

63/63 [==============================] - 8s 127ms/step - loss: 0.1563 - accuracy: 0.9365 - val_loss: 0.0708 - val_accuracy: 0.9691

Epoch 16/20

63/63 [==============================] - 10s 150ms/step - loss: 0.1687 - accuracy: 0.9335 - val_loss: 0.0654 - val_accuracy: 0.9790

Epoch 17/20

63/63 [==============================] - 10s 152ms/step - loss: 0.1690 - accuracy: 0.9340 - val_loss: 0.0580 - val_accuracy: 0.9814

Epoch 18/20

63/63 [==============================] - 9s 139ms/step - loss: 0.1586 - accuracy: 0.9380 - val_loss: 0.0653 - val_accuracy: 0.9765

Epoch 19/20

63/63 [==============================] - 8s 125ms/step - loss: 0.1615 - accuracy: 0.9390 - val_loss: 0.0738 - val_accuracy: 0.9728

Epoch 20/20

63/63 [==============================] - 13s 202ms/step - loss: 0.1636 - accuracy: 0.9305 - val_loss: 0.0758 - val_accuracy: 0.9715# plot validation and training accuracy

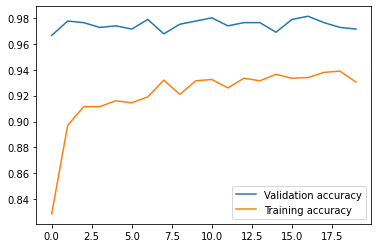

plt.plot(history.history['val_accuracy'], label='Validation accuracy')

plt.plot(history.history['accuracy'], label='Training accuracy')

plt.legend()

plt.show()

The validation accuracy stabilized around 97% during training. This is a stark improvement from what was obtained in model 1, as the classifier went from slightly better than baseline to near perfect. The model also does not appear to be overfit, as the training accuracy is actually lower than the validation accuracy for all of the epochs. This is likely because the random augmentation and dropout layers are only active during training.

This model seems to perform very well. Let’s see how it performs on unseen test data.

# Score on test data

loss, acc = model4.evaluate(test_dataset)

print('Test accuracy:', acc)

6/6 [==============================] - 0s 41ms/step - loss: 0.0762 - accuracy: 0.9740

Test accuracy: 0.9739583134651184

The test accuracy is very high at 97.4%, meaning that our classifier performs very well on images it has not seen before. Congrats, we have successfully made an image classifier that can differentiate between cats and dogs!
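The models in this post end with a Dense layer of two units and no activation, so they output raw scores (logits) rather than class labels. As a minimal sketch of how such scores turn into predictions and an accuracy figure, the example below uses made-up logits for four images; the helper `accuracy_from_logits` is hypothetical, and in the notebook the logits would presumably come from something like `model4.predict(test_dataset)`.

```python
import numpy as np

def accuracy_from_logits(logits, labels):
    """Pick the class with the larger logit and compare against the true labels."""
    predictions = np.argmax(logits, axis=1)
    return float(np.mean(predictions == labels))

# Hypothetical logits for four images; column 0 is cat, column 1 is dog
logits = np.array([[ 2.0, -1.0],
                   [ 0.3,  0.9],
                   [-0.5,  1.5],
                   [ 1.2,  0.1]])

# Hypothetical true labels (0 = cat, 1 = dog)
labels = np.array([0, 1, 0, 0])

acc = accuracy_from_logits(logits, labels)
print("accuracy:", acc)
```

In this made-up batch the third image is a cat that receives a higher dog score, so three of the four predictions are correct.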